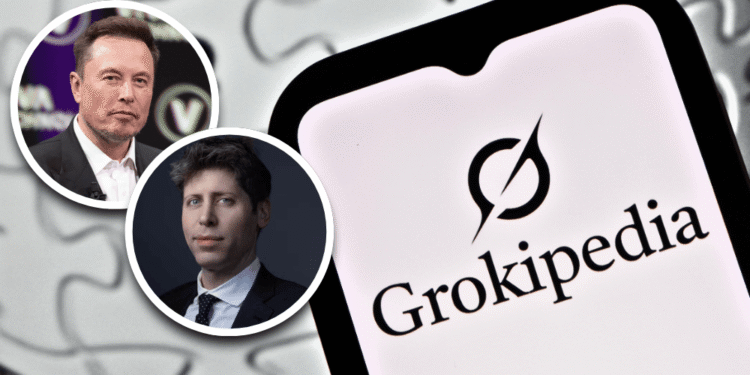

An AI-generated encyclopedia developed by xAI is drawing attention after appearing in citations produced by large language models, highlighting a shift in how machine systems source factual information.

Grokipedia, launched in October 2025, is written and updated entirely by artificial intelligence. The platform does not allow direct human editing. Entries are generated, revised, and expanded automatically in response to queries, positioning the site as a continuously updating reference system rather than a community-edited encyclopedia.

Reporting by the Guardian found that GPT-5.2 cited Grokipedia nine times across more than a dozen test prompts. The citations appeared mainly in responses to obscure or highly specialized questions, including inquiries involving Iranian political structures and historical legal disputes. The report noted that Grokipedia did not surface in prompts tied to widely debunked claims or topics with extensive public fact-checking.

The emergence of Grokipedia in AI citations has fueled discussion over how authority is established in machine-generated answers. Unlike traditional encyclopedias that rely on volunteer editors and layered moderation, Grokipedia operates through a single automated pipeline. Updates occur immediately as new information enters the system, without editorial review cycles or consensus-building among contributors.

An OpenAI spokesperson told the Guardian that ChatGPT’s web search is designed to draw on a broad range of publicly available sources and perspectives. The spokesperson said the company applies safety filters to reduce the risk of surfacing links associated with serious harm and that ChatGPT clearly displays the sources informing its responses through citations. OpenAI, they added, continues to run programmes aimed at filtering out low-credibility information and coordinated influence campaigns.

Grokipedia has faced criticism over disputed claims on politically sensitive issues, including same-sex marriage and the January 6 US Capitol riot. Supporters of the platform argue that removing anonymous human editors reduces internal disputes over bias and places responsibility for accuracy on the model itself and its training data.

As AI systems increasingly function as gateways to information, the appearance of AI-native encyclopedias in citation pipelines signals a broader change in how knowledge is produced and consumed. Grokipedia’s growing visibility reflects demand for fast-updating reference material designed for machine use, even as concerns over verification, oversight, and public trust continue to surround AI-generated sources.